3dux

3D-Linux: 3D UX Design

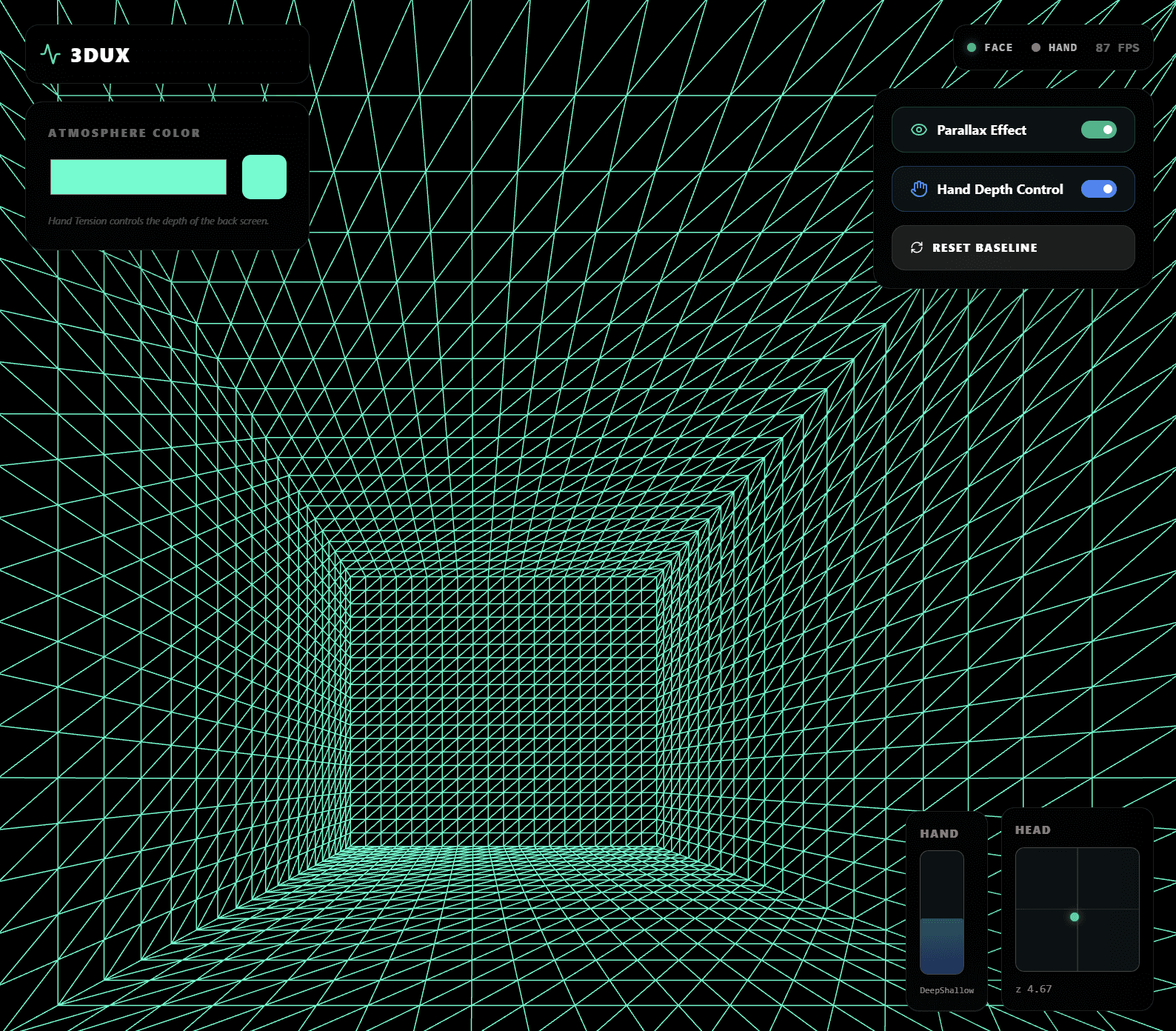

A spatial computing interface that turns a 2D screen into a depth-aware virtual window.

3dux uses head-coupled perspective and off-axis projection to explore how humans interact with computers beyond flat interfaces. Built for researchers, engineers, and explorers seeking alternatives to traditional windowed computing.

$ What is 3dux?

3dux is a spatial desktop interface prototype that reimagines how we interact with screens. Instead of a flat 2D window, it renders an inward-facing 3D workspace: a box of planes that responds to your head position in real time.
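One way to picture the inward-facing box is to treat the physical screen as the open front face, with the other five faces receding behind it. The following is a hypothetical geometry helper, not 3dux's actual scene code. The coordinate convention (screen centered at the origin in the z = 0 plane, +z toward the viewer, units in meters) and the names `buildBoxPlanes` and `Plane` are assumptions.

```typescript
// Hypothetical geometry for an inward-facing "box of planes" behind the screen.
// Assumed convention: the screen is centered at the origin in the z = 0 plane,
// x points right, y points up, +z points toward the viewer, and units are meters.
// The screen itself is the open sixth face of the box.

type Vec3 = [number, number, number];

interface Plane {
  name: string;
  center: Vec3; // center of the face
  normal: Vec3; // unit normal pointing into the box interior
  size: [number, number]; // face extent along its two in-plane axes
}

function buildBoxPlanes(width: number, height: number, depth: number): Plane[] {
  const hw = width / 2;
  const hh = height / 2;
  const midZ = -depth / 2;
  return [
    { name: "back", center: [0, 0, -depth], normal: [0, 0, 1], size: [width, height] },
    { name: "left", center: [-hw, 0, midZ], normal: [1, 0, 0], size: [depth, height] },
    { name: "right", center: [hw, 0, midZ], normal: [-1, 0, 0], size: [depth, height] },
    { name: "floor", center: [0, -hh, midZ], normal: [0, 1, 0], size: [width, depth] },
    { name: "ceiling", center: [0, hh, midZ], normal: [0, -1, 0], size: [width, depth] },
  ];
}
```

For a 0.6 m by 0.34 m screen and a 0.5 m deep box, `buildBoxPlanes(0.6, 0.34, 0.5)` yields five faces whose normals all point into the box interior. Their front sides are therefore visible through the screen, so the workspace reads as a room seen through the monitor.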

The core innovation: the perspective shifts with the viewer's head position, creating motion parallax anchored to the physical display. No VR headset is required; it runs on standard hardware.

Hand gestures modulate depth and scale, allowing intuitive control of the 3D workspace. It's a research exploration into whether spatial depth can improve focus, context, and ergonomics in everyday computing.

$ How it works

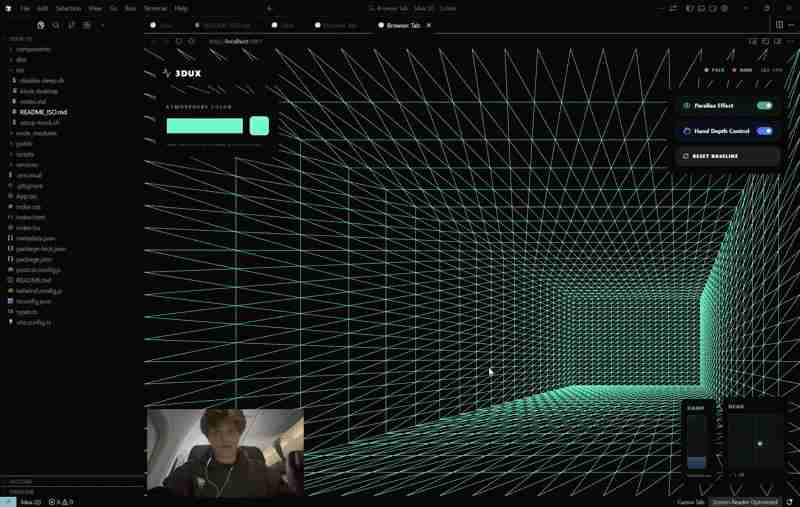

Live Demo

Head position controls the perspective. The 3D space shifts in real time as you move, creating motion parallax anchored to your display. The video feed (lower left) shows live head detection.
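The parallax follows from simple geometry. This sketch assumes the screen lies in the plane z = 0, the eye sits `eyeZ` meters in front of it, and a virtual point sits `depth` meters behind it. The function names and units are illustrative. The ray from the eye to the point crosses the screen at the fraction eyeZ / (eyeZ + depth) of its length, so a sideways head move of Δ slides the drawn point by Δ · depth / (eyeZ + depth).

```typescript
// On-screen x coordinate (meters, screen plane z = 0) of a virtual point that lies
// `depth` meters behind the screen, seen from an eye `eyeZ` meters in front of it.
function projectToScreenX(eyeX: number, eyeZ: number, pointX: number, depth: number): number {
  // Fraction of the eye-to-point ray at which it crosses the screen plane.
  const t = eyeZ / (eyeZ + depth);
  return eyeX + t * (pointX - eyeX);
}

// How far the drawn point slides on screen when the head moves sideways by `headShift`.
function parallaxShift(headShift: number, eyeZ: number, depth: number): number {
  return (headShift * depth) / (eyeZ + depth);
}
```

With the eye 0.6 m from the screen, moving the head 10 cm to the right slides a point 0.6 m behind the screen by 5 cm in the same direction. Points on the screen plane (depth 0) stay fixed, and very distant points approach the full 10 cm. That depth-dependent sliding is the motion parallax the demo relies on.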

Head Tracking

Computer vision (MediaPipe) estimates your head position in 3D space (x, y, z) from your webcam in real time, at 30-60 fps.
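MediaPipe's face landmarks are reported in normalized image coordinates (x and y in [0, 1]), so some geometry is needed to turn them into a metric head position. The following shows one common approach: a pinhole-camera model that recovers distance from the apparent spacing of the eyes. It is not necessarily how 3dux does it. The camera field of view, the 63 mm interpupillary distance, and the names `estimateHeadPosition` and `CameraModel` are assumptions.

```typescript
// One common way to turn two eye landmarks into a head position: a pinhole-camera model.
// Assumptions (not from the 3dux source): landmark x/y are normalized to [0, 1] with y
// pointing down, pixels are square, the webcam's horizontal field of view is known, and
// the viewer's interpupillary distance is about 63 mm.

interface Landmark2D {
  x: number; // normalized horizontal image coordinate
  y: number; // normalized vertical image coordinate, increasing downward
}

interface CameraModel {
  widthPx: number;
  heightPx: number;
  hfovRad: number; // horizontal field of view in radians
}

const ASSUMED_IPD_M = 0.063;

// Returns [x, y, z] in meters in camera space: +x toward the image's right edge,
// +y up, +z straight out from the lens.
function estimateHeadPosition(
  leftEye: Landmark2D,
  rightEye: Landmark2D,
  cam: CameraModel,
): [number, number, number] {
  // Focal length in pixels, derived from the horizontal field of view.
  const f = cam.widthPx / 2 / Math.tan(cam.hfovRad / 2);
  const lx = leftEye.x * cam.widthPx;
  const ly = leftEye.y * cam.heightPx;
  const rx = rightEye.x * cam.widthPx;
  const ry = rightEye.y * cam.heightPx;
  // Similar triangles: distance = focal length * real eye spacing / pixel eye spacing.
  const eyeSpacingPx = Math.hypot(rx - lx, ry - ly);
  const z = (f * ASSUMED_IPD_M) / eyeSpacingPx;
  // Midpoint between the eyes, measured from the image center.
  const u = (lx + rx) / 2 - cam.widthPx / 2;
  const v = (ly + ry) / 2 - cam.heightPx / 2;
  // Back-project to metric offsets; negate v so that +y points up.
  return [(u * z) / f, (-v * z) / f, z];
}
```

The result is in camera coordinates. The webcam usually sits above the display, so a renderer would still offset it by the camera's mounting position (an assumed calibration value) to express the eye relative to the screen center. Two caveats of this design: a mirrored preview flips x, and turning the head shrinks the apparent eye spacing, which inflates the distance estimate.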

Perspective Shift

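A generalized (off-axis) perspective projection is recomputed as your head moves. The frustum's apex follows your eye while its base stays fixed to the physical screen edges, which creates motion parallax anchored to the display.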
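For a flat screen facing the viewer, the generalized projection reduces to an asymmetric frustum. Its near-plane edges are the screen edges, measured from the eye and scaled by near / eyeZ. This sketch assumes:

- the screen is centered at the origin in the z = 0 plane, with +z toward the viewer and units in meters;
- the eye position is already expressed in that frame.

It builds a column-major matrix in the layout of the classic OpenGL `glFrustum`, which is the order WebGL expects. The function name `offAxisProjection` is hypothetical. The general form, which also handles screens rotated relative to the tracking frame, adds a rotation into the screen's basis.

```typescript
// Off-axis (asymmetric) perspective projection for a head-tracked, axis-aligned screen.
// Assumed convention: screen centered at the origin in the z = 0 plane, x right, y up,
// +z toward the viewer, units in meters; `eye` is in the same frame with eye[2] > 0.
type Vec3 = [number, number, number];

function offAxisProjection(
  eye: Vec3,
  screenW: number,
  screenH: number,
  near: number,
  far: number,
): number[] {
  const [ex, ey, ez] = eye;
  // Project the screen edges onto the near plane by similar triangles.
  const scale = near / ez;
  const left = (-screenW / 2 - ex) * scale;
  const right = (screenW / 2 - ex) * scale;
  const bottom = (-screenH / 2 - ey) * scale;
  const top = (screenH / 2 - ey) * scale;

  // glFrustum-style matrix, stored column-major (index = column * 4 + row).
  const m = new Array<number>(16).fill(0);
  m[0] = (2 * near) / (right - left);
  m[5] = (2 * near) / (top - bottom);
  m[8] = (right + left) / (right - left);
  m[9] = (top + bottom) / (top - bottom);
  m[10] = -(far + near) / (far - near);
  m[11] = -1;
  m[14] = (-2 * far * near) / (far - near);
  return m;
}
```

Because the screen stays axis-aligned, the matching view matrix is just a translation by the negated eye position. A point on the screen's left edge therefore always lands at the left edge of the viewport (NDC x = -1), wherever the viewer stands. Only content in front of or behind the screen plane appears to move.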

Hand Modulation

Hand landmarks control depth scaling and workspace transformations, enabling intuitive 3D interaction without controllers.
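Here is one hypothetical example of hand modulation: the distance between the thumb tip and index fingertip drives the workspace's depth scale. The sketch assumes MediaPipe's 21-point hand landmark indexing (0 = wrist, 4 = thumb tip, 8 = index fingertip, 9 = middle-finger knuckle). The thresholds, scale range, smoothing factor, and function names are illustrative guesses rather than values from 3dux.

```typescript
// Hypothetical mapping from a pinch gesture to a workspace depth scale.
// Landmark indices follow MediaPipe's 21-point hand model; all tuning constants are guesses.
interface Point3 {
  x: number;
  y: number;
  z: number;
}

const WRIST = 0;
const THUMB_TIP = 4;
const INDEX_TIP = 8;
const MIDDLE_MCP = 9;

const MIN_SCALE = 0.5; // depth scale for a fully closed pinch
const MAX_SCALE = 2.0; // depth scale for a fully open pinch
const CLOSED_RATIO = 0.2; // pinch ratio treated as fully closed
const OPEN_RATIO = 1.2; // pinch ratio treated as fully open

function dist(a: Point3, b: Point3): number {
  return Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);
}

// Thumb-to-index distance divided by palm length, so the value stays roughly
// constant as the hand moves toward or away from the camera.
function pinchRatio(hand: Point3[]): number {
  const palm = dist(hand[WRIST], hand[MIDDLE_MCP]);
  return palm > 0 ? dist(hand[THUMB_TIP], hand[INDEX_TIP]) / palm : 0;
}

// Linearly map the pinch ratio onto [MIN_SCALE, MAX_SCALE], clamping at both ends.
function targetDepthScale(hand: Point3[]): number {
  const t = (pinchRatio(hand) - CLOSED_RATIO) / (OPEN_RATIO - CLOSED_RATIO);
  const clamped = Math.min(1, Math.max(0, t));
  return MIN_SCALE + clamped * (MAX_SCALE - MIN_SCALE);
}

// Exponential moving average step to damp frame-to-frame landmark jitter.
function smoothTowards(current: number, target: number, alpha = 0.2): number {
  return current + alpha * (target - current);
}
```

Each frame, a renderer would call `scale = smoothTowards(scale, targetDepthScale(hand))`. The clamp keeps extreme or misdetected poses from blowing up the workspace, and the smoothing trades a little latency for stability.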

$ Technology stack

RENDERING

VISION

RUNTIME

PLATFORMS

Current Status: Web Demo

This demo runs in your browser. It makes no external ML API calls: all tracking runs locally on your machine, which respects your privacy and enables offline operation.

Compatible with: Chrome/Edge (90+), Firefox (88+), Safari (14+)

Vision: Linux Distro

The long-term goal is to develop 3dux as a custom Linux distribution with native desktop integration, deeper OS-level optimizations, and a complete spatial computing environment.

$ Design goals

Explore alternatives to flat interfaces

Challenge the assumption that 2D windows are the only way to interact with digital content. Investigate whether spatial depth improves cognition and workflow.

Hardware agnostic

No $2000 headset or specialized equipment. Works with a laptop camera and a monitor you already own.

Open source, always

3dux is fully open source. The entire codebase, documentation, and research are publicly available on GitHub. Contributions, issues, and forks are welcome.

Prioritize clarity and reproducibility

Explainable algorithms and accessible source code. Others should be able to understand and extend the work.

Research first, product second

This is an exploration, not a polished app or a startup pitch. It aims to be honest about its limitations, assumptions, and next steps.

$ Project status

Phase 1: Web Demo (Current)

3dux is active as a browser-based research prototype. The core rendering and head-tracking pipelines are stable. Current focus is on documentation, feedback, and proof-of-concept validation.

Phase 2: Linux Distro (In Progress)

- Custom Linux distribution build
- Native desktop session integration
- Spatial window management system
- Multi-monitor support
- Performance optimization for lower-end hardware
- Formal user studies